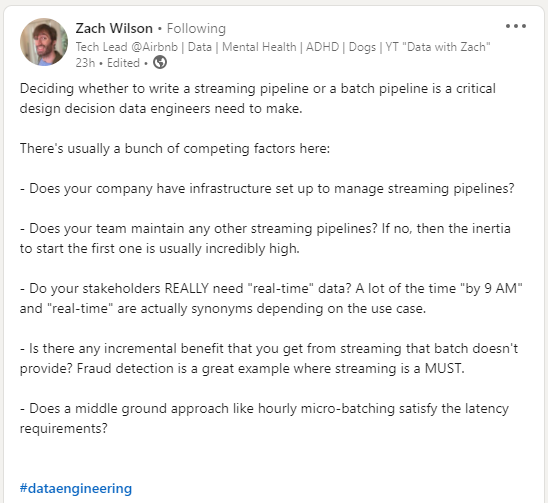

On batch vs stream.

Earlier today, one of my favorite follows on LinkedIn raised an awesome question. I was going to just answer there but couldn't stop typing so here's a blog post instead.

One overall point is that it all depends on how complete the data strategy is. There's a common mix-up between real-time and continuous: real-time is an SLA that ultimately boils down to who you're asking - and dashboard consumers will typically tell you "XX minutes". But look further (automation, activation, support, security & fraud… ) and you'll find a whole new set of consumers with different expectations: events and sub-minute availability. For these consumers you can't know WHEN the "thing" happens but when it does, it needs to be modelled and served in real-time (per the system's SLA). You can't reasonably expect them to execute entire DAGs at sub-minute intervals to figure out if any model contains a trigger or a feature. The perverse effect of thinking about real-time as "how often should this be refreshed" is that it basically reverse-engineers a report out of any use-case.

Altogether it seems batch strategies are being kept in place by dashboard-driven organizations. But more and more organizations are crossing that gap and heading into data-driven operations and "real real-time". These allow Analytics Engineers (among others) to have insane operational contributions that extend way beyond reports. This game of questioning real-time out of requirements and narrowing down use-case to dashboard (partly to avoid spinning up a new stack) becomes harder as the completeness of data strategies evolves.

OBVIOUSLY I'm biased on this one (coming from the other side of that question) but here are some final point-to-point thoughts:

- Having infrastructure setup is a one-off and the new gen of data tools has fully integrated the SAAS practices. The cost of entering continuous data operations is now marginal and the cost of operating them has drastically collapsed.

- That's a self-fulfilling prophecy, you HAVE to start somewhere. Due to the depth of modern DAGs it may be that starting with a rightmost model is excruciating but I would argue that this isn't starting small and "narrowing down use-cases" should involve looking at less enriched, less modelled datasets.

- Very true for reports but I'll spare you another rant.

- Is there any incremental benefit that you get from batch that abiding to serviceable outputs doesn't provide? Technical bar and toolbox completeness are excellent ones.

- "We hesitated between going to the post office every day and receiving our mail so we settled for going to the post office every second". Ok, a bit harsh but you get the idea.

To me this activation of data is where legacy strategies fail. In the Modern Data Stack, CDP providers have understood this and are now the most advanced components but the rest of the stack is still a churning point (particularly around core activation processes like Modelling and Reverse-ETL).

Have trouble operationalizing your data? Let's chat - [email protected]